Editorial

Date

Key Takeaways for Applied Machine Learning, from 3D Modeling to Environmental Simulation

From July 13 to 19, ICML 2025 (International Conference on Machine Learning) brought together thousands of researchers and practitioners in Vancouver to explore the latest breakthroughs in artificial intelligence and machine learning. As one of the world’s most influential academic conferences in AI, ICML continues to shape the future of deep learning, foundation models, and applied systems across disciplines.

This year, the CDRIN (Centre de développement et de recherche en intelligence numérique)—a Quebec-based applied research center focused on AI, 3D technologies, and interactive systems—attended to assess where emerging innovations intersect with real-world challenges in industry and science.

For teams working on simulation, computer vision, and machine learning in production environments, ICML provided valuable insights into improving data quality, increasing model robustness, and reducing friction in R&D workflows.

In this recap, researchers Sherry Taheri and Olivier Leclerc share what stood out to them—with a particular lens on transferable knowledge, actionable tools, and the evolving landscape of applied AI in Quebec and beyond.

1. ICML 2025 featured many advances in adapting large language models to new tasks. What recent techniques stood out to you as most relevant for practitioners working with limited compute or on edge devices (such as parameter-efficient fine-tuning, model compression, or low-rank adaptation)?

Olivier Leclerc: I found the ParetoQ research particularly insightful. It explored optimal trade-offs between model size and accuracy, and their framework produced a ternary 600M-parameter model that outperformed a previous state-of-the-art 3B-parameter model.

The model achieves optimal accuracy at various quantization levels: 1-bit, 1.58-bit, 2-bit, 3-bit, 4-bit, making it highly adaptable. This kind of efficiency means that language models running directly on mobile devices are becoming both faster and more accurate. They even made available a MobileLLM release as proof of concept.

Sherry Taheri: I didn’t attend any talks specifically on efficiency, but I did meet the developers of Ollama, which I use frequently. What caught my attention regarding the first part of the question was research on how LLMs handle spatial understanding and track world state. I am working on a similar project right now, so seeing what others have discovered was really interesting.

2. Reinforcement learning (RL) was a major theme at ICML, particularly in robotics and autonomous agents. Which approaches looked most promising for improving real-world systems in automation, manufacturing, or vision-driven control?

Olivier : RL is typically trained in simulators where models can run endlessly, but in real-world applications, RL needs to extract as much information as possible from the limited examples it has.

I enjoyed discovering new ideas aimed at improving what researchers called “Optimal Sample Efficiency”, essentially, how much an agent can learn from fewer examples. I’m still new to RL, but the direction seems promising: better generalization from less data!

Two examples:

Another standout was a paper from MILA: “Network Sparsity Unlocks the Scaling Potential of Deep Reinforcement Learning.” It showed that introducing sparsity (disabled neurons or holes in the network) lets larger models scale effectively in RL. Previously, performance plateaued with larger architectures. That’s a big potential for future applications!

3. With your experience working with vison systems and 3D reconstruction, which ICML papers felt most applicable to your current challenges in this category?

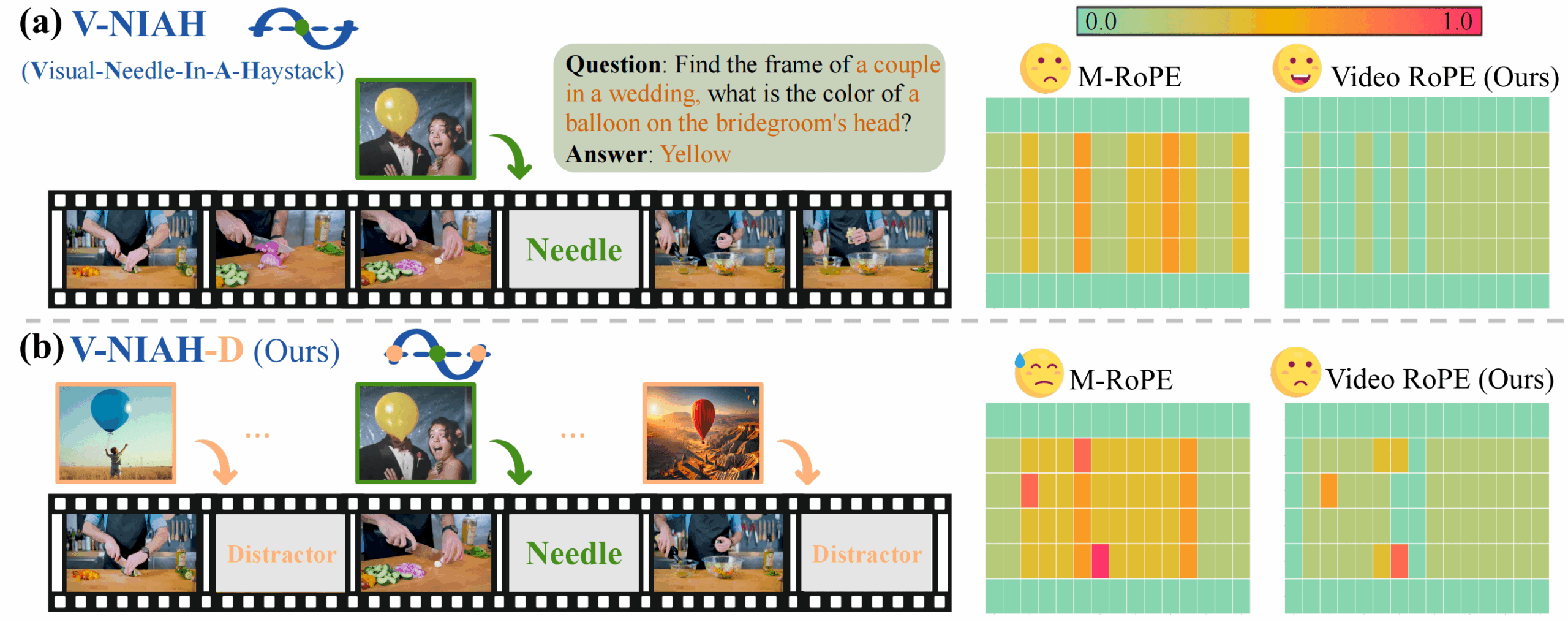

Olivier: VideoRoPE struck me as potentially very useful. It extends transformer models with both spatial (including 3D) and temporal understanding, enabling deeper reasoning over video content that was previously possible.

You could, for instance, ask something like “Find the frame of a couple at a wedding, what’s the colour of the balloon above the groom’s head?” Even if that detail appears only for a fraction of a second on screen, the model can extract and relate it.

4. ICML featured several advances in time series forecasting, for domains like climate and finance. Are these methods adaptable enough for real-world industry projects?

Olivier: Before the conference, I hadn’t realized how broadly applicable these foundation models for time series could be. The Sundial presentation surprised me: it is a foundation model for time-series that predicts the next values in any sequence without requiring additional training.

This single model could be used to weather data, stock markets, or many other types of sequential data. While I wouldn’t yet trust it enough for direct deployment in critical industry tasks, it could be to quickly generate plausible synthetic data to accelerate a project development.

Sherry: Yes. I’d also highlight the talk by Ana Lucic, who presented Aurora, a foundation model for Earth Systems, developed by Microsoft.

Modern Earth system forecasts rely on complex, resource-heavy models with limited adaptability and accuracy. Aurora is a large-scale foundation model which addresses these challenges of traditional forecasting (complexity, high resources consumption, and limited adaptability) by enabling anyone to generate practical Earth system predictions.

By fine-tuning the pre-trained model on downstream tasks such as atmospheric chemistry, air quality prediction, wave modeling, hurricane tracking, and weather forecasting, users can obtain tailored forecasts at much lower cost.

It’s an excellent example of how AI help reduce computational demand and developpement effort. Aurora’s pre-trained and fine-tuned model weights are available on Hugging Face.

The strong presence of trading firms at ICML also underscores AI’s growing importance in finance and algorithmic trading.

5. Model reliability remains a central concern. Which new approaches to calibration, bias mitigation and robustness did you find most actionable for applied ML teams working to build safe and trustworthy systems?

Sherry: I came across several interesting projects in areas like adversarial machine learning, federated learning, and differential privacy. Since I’m less familiar with those topics, I found myself more drawn to the posters that focused on bias and fairness.

One area that stood out was how current text-to-image models often misrepresent cultural details. This highlights the need for more culturally aware AI systems, as well as better evaluation frameworks. We must ensure that AI-generated content represents different cultures accurately, otherwise, it can perpetuate stereotypes or lead to misrepresentation.

One paper that captured this issue particularly well was “CulturalFrames: Assessing Cultural Expectation Alignment in Text-to-Image Models and Evaluation Metrics“.

6. Did you notice progress in areas like robust ML, real-time inference, or generalization that could reduce barriers to deploying ML in production—especially for smaller or mid-sized teams in games or applied R&D?

Sherry: To be honest, I didn’t see any research that felt immediately ready for production, especially given how results can sometimes be cherry-picked and require extensive validation.

That said, I believe any content that helps applied researchers and developers gain insight into the tools they already use is valuable. One example was the talk by Sergey Ioffe, the inventor of Batch Normalization. It’s something we rely on regularly, so hearing about its origin and evolution directly from the author was particularly insightful.

7. Several sessions focused on data-centric methods. Which developments do you think could shift how studios or research labs approach data acquisition and model training?

Olivier: A good candidate is “Outlier Gradient Analysis“. It shows a way to detect detrimental examples in the training dataset. In many cases, just a few of them can push the model in the wrong direction. Identifying and removing those examples can improve accuracy. I’ve seen this kind of issue in practice and dealt with it empirically, so it’s great to now have a more formal method.

Sherry: We often hear about the magic of having massive amounts of data, but more data isn’t always the best or only path to better models. As I’ve mentioned in the last question, adding more pre-training data can sometimes harm performance on downstream tasks.

In situations where high-quality data is not available, we can still make progress. The “Delta Learning Hypothesis” talk explored learning from the difference between weak and weaker data through preference learning.

So, it’s not just about scale, sometimes reframing the problem can lead to more efficient and effective model development.

8. Many CTOs are concerned about the challenges of data acquisition and annotation, especially for training advanced ML models. What best practices or tools presented at ICML could help streamline these processes for creative or scientific applications?

Olivier: One piece of research that caught my attention is “Improving the Scaling Laws of Synthetic Data with Deliberate Practice“. It’s a method for generating new synthetic data using a diffusion model, but with a structured process made to push the boundaries of the learning and explore the edge cases. This helps the model generalize better at test-time, so you get improved performance once deployed, without adding any real training data.

Sherry: There was a lot of great content on data curation this year at ICML, along with a strong presence of data-focused startups.

One talk I liked showed how we can use a base language model combined with an instruction tuned model to create synthetic data that’s both reliable and diverse.

Another interesting talk by the researcher James Zou explained how to curate medical reasoning data (an area where data is still lacking) using sources like clinical case reports, YouTube instructional videos, and Twitter/X discussions.

9. Maritime and environmental sciences face unique data and modeling challenges. Can you highlight how AI research showcased at ICML might help solve specific pain points in these fields, and whether there’s a valuable opportunity for tech transfer to the entertainment sector?

Olivier: Tracking individuals is a common need in both sectors, often with restrained training data. That’s why I found Efficient Track Anything interesting: it’s a zero-shot model, so it doesn’t require any training on the target data. One potential use is to generate better or more diverse training data for a smaller model if needed. The exact same approach could easily transfer to entertainment, like tracking players in sports analytics or characters in interactive games.

10. With the breadth of research subjects presented, have you seen any other discoveries you feel have potential for the future and are worth highlighting?

Olivier: One interesting concept I’m glad I stumbled upon is “Memorization Sinks: Isolating Memorization during LLM Training“. It could be a steppingstone toward the goal of favorizing generalization, which many of us are aiming for.. The idea is to turn on/off groups of neurons assigned to specific documents (training examples), encouraging memorization to “sink” into those neurons that are active only for this example, while general concepts are captured ” by those that remain active across tasks.

Sherry: One talk that really intrigued me was “When good data backfires: Questioning Common Wisdom in Data Curation”. The paper Overtrained Language Models Are Harder to Fine-Tune shows that while more pre-training data improves base models, it can harm downstream performance, a phenomenon called catastrophic overtraining. As pre-training data scales, the resulting models become more sensitive to noise, which can lead to worse outcomes after fine-tuning or quantization.